Overview

In this unit, we’ll be giving big motivations for Unit 4 by doing 2 things:

- Showing Big-Oh, Omega, Theta terminology. (Asymptotic Notation)

- Bounding recurrences and general functions.

- Showing how induction is used in the context of computer science.

Think of this as a big culmination of the reason we learned logic, proofs, induction, and logical statements. After this point, we will be focusing on a different branch of topics.

Asymptotic Notation

Some Motivating Scenarios

Let’s begin with an example. Let’s say that we wrote this python program that just counts how many even numbers there are.

def count_even(arr):

count = 0

for x in arr:

if x % 2 == 0:

count += 1

return countHow long does this program take?

You could perhaps plot a graph, where the x-axis is the size of the array. And the y-axis is how long it takes for this program to run. We could also write similar C++ and maybe even Java code that all does something like this.

And let’s pretend that we ran some experiments, and plotted some graphs to find out how long they take. Then maybe we’d get something like this:

Notice they all kind of look like straight lines. Like linear functions. Why? Because intuitively, no matter the programming language the 3 of them would be following the same idea: Start from the first item, go through each item until the last, and for each of them, we do a check to see if it’s even or not. In some sense, if we had items, the for loop just goes through all of them.

Depending on the programming language (and even computer hardware), our total time taken might not the same, but we can expect the same linear trend between the time taken, and the array size. We want a say to basically say “no matter the programming language, we expect to see the same linear trend”.

This does not just apply to different programming languages, but even different approaches to solve the same computational problem.

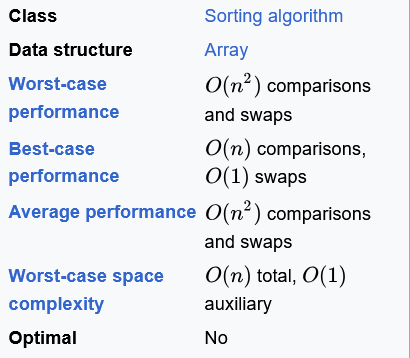

Here’s another example, let’s say we wanted to sort numbers in an array. There are many ways of doing this, let’s look at 2 possible ways:

def sorter1(arr):

for index1 in range(1, len(arr)):

index2 = index1

while index2 > 0 and arr[index2] < arr[index2 - 1]:

arr[index2], arr[index2 - 1] = arr[index2 - 1], arr[index2]

index2 -= 1

def sorter2(arr):

for index1 in range(len(arr) - 1):

min_index = index1

for index2 in range(index1, len(arr)):

if arr[min_indx] < arr[index2]:

min_index = index2

arr[min_index], arr[index1] = arr[min_index], arr[index1]Here’s an idea about how these 2 sorting methods work. The first one basically tries to move the element backwards until . After that, we rinse and repeat with .

For example, let’s say that we’re on the 4th element, we iterate backwards and keep swapping until the element is greater than the element on its left, which only happens when it’s the second element on the array.

How many steps does the program take? Well the inner loop takes at most many iterations, and ranges from to . So sorter1 uses at most iterations at most.

The second one, on the other hand, repeatedly tries to find the minimum element, before placing it in the correct location.

How many iterations does this take now? For index1, we need to check values. This means the total iterations are .

Okay, so both methods have iterations. But what they do each iteration is not the same! The first one does some swapping, the second one does comparisons and only swaps towards the end. It would be reasonable to think that because of that, even though the number of iterations are the same, the total time taken would be different. Again, a hypothetical plot you might see if we took some experimental values might look something like this:

We might expect both sorters to have a quadratic relationship between the time taken vs. the array size. So the curves might look like for some constants .

In both scenarios we’ve talked about, bear in mind that what we care about is the “rough trend” of running time, as approaches infinity. In other words, as grows, what kind of curve does the running time look like? That is, how much work is done, relative to the input size . Think about this as a form of “scaling”.

So for example, let’s say we had two programs, one of which whose time taken curve is , and another program whose time taken curve is . Which program is more “efficient”? Typically we want to design solutions that scale well, and that means solutions that handle large scale inputs very well. For that reason, we would like to say the second program is better. But how do we communicate this fact?

Big notation

For that reason, it is very common for computer scientists to talk about certain functions using Big notation. (Sometimes referred to as big Oh notation.) Here’s how it works, given a function , then the set contains all functions such that:

(Think of as the set of positive numbers. I.e. anything greater than .)

Also, we will only ever consider functions that are always positive. I.e. .

So for example, if , then the set . And this set contains functions like , or functions like , . On the other hand, functions like are not in the set .

Why is this the case? Let’s run through the examples.

For a function like , how do we argue that ? We need to provide a value and a positive constant . How about , and . Then and .

What about something like , how do we argue that ? We need to provide a value and a positive constant . How about , and . Then and .

Lastly, consider . We have that . So setting and would help us justify this.

What about ? Why is that function not in ? Remember, to not be in the set means the negation of the condition. I.e.

So how do we do this? We need to show that no matter the starting point , and the multiplier , at some point beyond , we will find some value for which . Consider any , and any positive value . Then we will pick , and claim that for this , .

Some visual intuition behind Big O

So what’s going on here? Let’s try plotting out some example graphs.

Here, the red curve is , the green curve is , the blue curve is , and the purple curve is . Here’s the idea: no matter the gradient of a linear curve, no matter how large, there will always be a point where the quadratic curve will be larger than it after some point.

So let’s look at the definition of big O again, and see how it lines up.

The here is the point at which starts being larger. But what about the scaling factor ? Here’s the idea, let’s say I had 3 functions and and . All of them actually scale the same way: they’re all linearly related to .

So you’re free to pick a scaling factor to say something like .

So you can think of as basically saying ” as a function at some point onwards is going to be the function some positive multiple of function “.

Big O in the Wild

In fact, big O notation is actively used in the wild. For example, the containers provided by the standard template library by C++ come with documentation on their complexity. This is how C++ tells you how the operations/methods of their containers scale with the size of the containers itself.

![[cppref-screenshot.png|]] (Screenshot taken from cppreference)

Even wiki pages that discuss algorithms and data structures use this notation.

Big Omega

As previously mentioned, think of as our way of saying a function is “at most” function . There are a few other notations that are less common, but still worth mentioning.

Given a function , then the set (pronounced “Big Omega”) contains all functions such that:

Notice that all we have done is flipped the into a . So big Omega is basically saying that a function is “at least” function .

So along those lines, let’s look at some examples:

We can say something like , we can also say something like . On the other hand, we cannot say something like .

Big Theta

Lastly, given a function , the set is defined to be the set of all functions such that and . In particular:

Some properties about asymptotic notation

The asymptotics of polynomials

Now that we have these sets that talk about functions, let’s cover some useful properties about them.

Let’s start with a function like . Can we say this is ? After all, intuitively, for large enough , the dominant term is here, and the smaller terms like and start to become insignificant in comparison.

So given a polynomial like the one above, we can find the most dominant term (the one with the highest power), and then drop any constant factors that it comes with, then conclude it is big O of that function. Like in our example above, we identify the , and drop the constant factor, thereby concluding the function is in .

Claim

Given a degree polynomial where , then .

Why is this true? Here’s a sketch of the math behind it, we are going to show that from onwards, for some constant .

Proof:

- Consider a degree polynomial:

-

- So setting and , we are guaranteed that . Therefore,

In fact, perhaps a little unintuitively, we can also say the following:

Claim

Given a degree polynomial where , then .

Why is this true? We are now saying that the function grows at least as the rate of its highest term. Which intuitively still makes sense, since as tends to , the function is still dominated by the term. But how do we prove this? It may be tempting to prove this in the following way:

Faulty Proof:

- Consider a degree polynomial:

-

- Let , , then

Where did we go wrong? Why is this faulty? Hint: Is line 2 correct?

Answer

For example, consider . Can we really say for ? No we can’t!

So instead, we’re going to prove a useful lemma first before proving the original statement properly.

Lemma: Let where , and . Then for all , . In other words, is greater than or equals to .

So what does this mean? Let’s try working out an example being seeing the general idea:

Consider a polynomial like . We want to say that there is some and some positive such that for . So how do we do this? We’ll split into three parts and rearrange the sum:

Now we know that from the previous part, as long as is large enough, we know that , and . In particular, the previous lemma proved that as long as , then we can say that:

So what about in general when we have a degree polynomial?

Proof:

- Let be a degree polynomial with

- Then by setting , we know that is non-negative, so:

- Since and , we have that . So setting and , we are able to conclude that

Now from the previous 2 parts, we know that and . So we can conclude that .

Claim

Given a degree polynomial where , then .

The transitivity of Big O

Let’s say that we relate function to function if . Then this relationship is transitive.

Proof:

- Let be functions.

- Assume that and .

- Since , such that ,

- Since , such that ,

- Consider , and .

- Since and , therefore

- Consider any . Then and .

- such that for all

- If and then

The asymptotics of other functions

Now that we have established transitivity, we can start comparing functions quite easily. In fact, this means we can start placing functions down in some kind of “ordering”. Let’s show how some common functions are ordered among each other. From “smaller” to “larger” function, we have that:

Okay, that’s a very long list. We aren’t going to prove all of this, but it’s hopefully most of these are very intuitive. However, let’s take a closer look at the following:

Claim

but

This might look a little unintuitive at first. After all, isn’t ?

Let’s prove the first part first.

Proof:

- Consider , and . Consider any :

- Thus,

Well that was pretty straightforward. But what about the other direction? We’ll show no matter the and someone picks, we can find a value for which . No matter the multiplier, the function will overtake at some point.

Proof:

- Let and arbitrarily chosen.

- Set .

- Then

Big O vs Big Omega

One last thing for us to think about: If , can we also say ? In fact, we can!

Claim

If , then .

Proof:

- Assume

- Then such that ,

- Since , .

- Thus, ,

- So such that ,

- Thus,

Recurrences and Big O: The Substitution Method

Now that we’ve talked about Big O, let’s try relating it back to program analysis. There are quite recurrences that we might encounter when writing recursive programs. For example, here’s a recursive program that computes :

def factorial(n):

if n == 1:

return 1

return n * factorial(n - 1)For example, we want to say that this programs running time has a linear running time with respect to . How do we even do that?

If we ever want to analyse this, there are 2 parts to it:

- We need to look at the program and derive the recurrence that corresponds to the program.

- We need to bound the recurrence.

While we won’t do much of part 1 here, we will be talking more about part 2. But for this example specifically, when , there is work being done: the comparison, returning a value. When , the total work done is work being done. Why? Because we need to do amount of work to solve , and an additional amount of work to multiply the answer by , and return it.

So, we obtain this recurrence:

Okay, now we need to bound it. In this case we will prove that . How do we do this? This is done via the substitution method.

Let’s look at the proof before pointing out the features it has. To prove that , we need to show that for some constant .

Proof Let , assume that , . Then:

This proof probably looks very different from the ones we have done so far. Here are the important steps:

- We first take the in the definition of , and just re-write this as . This is very informal, but this is a simplification that just makes it easier for us to do the proof. This is why you see it written as in the first line.

- We typically have to make use of our assumption to replace any recurrent terms. For example, is replaced by due to our assumption.

- Since we wanted to show that , the assumption made was that .

- Lastly, we need to conclude for the exact same statement as our assumption: We assumed , so we have to conclude that .

Notice here that while this looks like a proof of induction, we don’t have a base case, and there is a reason for this: We are just trying to prove that . Since this means we only care about showing that for some onwards (where are of our choosing), this technically means we can set what we want to be our base case, and artificially choose constants for which the base case is always true. So there’s really no point in covering the base case for the substitution method. This makes it a very quick and easy tool to bound recurrences.

A negative example of the method

Now, before showing you a few more positive examples, let’s go through a negative example of the substitution method.

Let’s say we were given the following recurrence:

Let’s say we suspect that . Given that, again, we are going to let and assume that for all , .

Then: Faulty Proof: Let , assume that , . Then:

Okay, this proof looks harmless enough. Where did we go wrong?

Hint

Does the conclusion look right to you?

Answer

We have to conclude that , not conclude that .

Remember, we made an assumption that for all . To fulfill our end of the deal, we need to prove that . If we had instead shown that , we are falling shy of that goal.

How do we fix this proof? Here’s an idea, instead of assuming , we are going to assume that , for all . This might seem counter-intuitive, but it works. After all, if we can conclude that for all , , then it holds that , which then means that .

Proof: Let , assume that , . Then:

Et voila! We are done. Again, notice how we assumed , so our conclusion has to be .

A few more involved examples

Let’s do 2 more examples demonstrating this method.

Example 1:

Let be a function such that:

Then to show that :

Proof: Let . For all , assume that . Then:

Example 2:

Then to show that :

Proof: Let . For all , assume that . Then:

Program Correctness Via Induction

We will end this unit on a technique for proving that programs are correct via induction. We will not go through very complicated programs, but it would be great to cover some to demonstrate this idea. The section is really a combination of both programming and math, which really is what computer science is all about sometimes.

Let’s look at this python program that adds up all the elements in an array.

def adder(arr):

total = 0

for i in range(len(arr)):

total += arr[i]

return total Why is this correct? I mean perhaps it’s obvious, we’re just adding up everything in an array. But we’re going to use this simple example so show you how we can actually prove programs are correct via induction. Of course, no sane programmer actually does this for simple programs, but if we were to use an actually complicated program right now, this might be too difficult.

Here’s the idea, we want to say that:

- The program is correct before the iteration.

- For from onwards, if before the iteration, the program was “correct”, after the iteration, it is also correct.

Does this look familiar? It does look like our induction base case and inductive cases! And again, we are going to look at proof formats that closely mimic this.

We wish to claim the following:

At the start of the iteration (where starts from ),

totalis the sum of elements in the sub-arrayarr[0..(i - 1)]. I.e.totalis equal to .

As an example with an array that holds , think about how before the iteration where , total is 0 (because the sub-array is empty). Before the iteration where , total is , which is the sum of elements in the sub-array . Before the iteration where , total is , which is the sum of elements in the sub-array . Before the iteration where , total is , which is the sum of elements in the sub-array . Which also means that when , our program has effectively computed the sum of the entire array!

We call this statement that is always true throughout the run of the program as the invariant. So how do we justify the statement?

Here’s an inductive proof that does that.

-

(Initialisation) When , the subarray is empty. There are no elements, and thus the sum of an empty array is . Since is , so the invariant is true before the iteration where .

-

(Maintenance) Assume that at the start of the iteration (where starts from ), is the sum of elements in the sub-array , or .

Then, during the iteration, is updated to . Thus, by our assumption, the new updated value of is .

-

(Termination) The loop terminates when . Thus, , which is the sum of the entire array.

Here’s the intuition behind why this proof works: We’re saying that assuming it maintained our invariant before the iteration, we just need to maintain the invariant so that it is still true after the iteration. Since when the loop ends, , the invariant helps us argue that total is the sum of the entire array.

Let’s do two other examples to demonstrate this idea.

Example 1: Finding Minimum in an Array

Let’s say we wanted to tell if a value is an array, we could also write it like this:

def find_min(arr):

value = arr[0]

for i in range(1, len(arr)):

value = min(arr[i], value)

return valueThen, to prove this is correct, we will use the following invariant:

At the start of the iteration,

valueis the smallest of elements in the sub-arrayarr[0..(i - 1)].

-

(Initialisation) When , the subarray has a single element. Since

valueis set to , the invariant is true. -

(Maintenance) Assume that at the start of the iteration (where starts from ),

valueis the smallest of elements in the sub-array .Then, during the iteration,

valueis updated to . Thus, by our assumption, the new updated value ofvalueis . -

(Termination) The loop terminates when . Thus,

valueis set to , which is the minimum of the entire array.

Pictorially, here’s how the maintenance is done.

Example 2: Selection Sort

Let’s try something a little more involved, let’s re-visit the sorting program at the beginning, and prove that it actually does correctly sort.

def find_min_index(arr, starting_idx):

min_index = starting_idx

for index2 in range(starting_idx + 1, len(arr)):

if arr[min_indx] < arr[index2]:

min_index = index2

return min_index

def sorter2(arr):

for index1 in range(len(arr) - 1):

min_index = find_min_index(arr, index1)

arr[min_index], arr[index1] = arr[min_index], arr[index1]Let’s assume that find_min_index correctly finds the index of the minimum element in the sub-array . Then we just want to prove that sorter2 is correct as a sorter.

So what should our invariant be?

So the idea is that since is sorted, we should try to find the item that belongs in the slot, which happens to be the smallest element in the sub-array .

At the start of the iteration, the sub-array is sorted and contains the smallest elements of the entire array .

So let’s look at the proof:

-

(Initialisation) When , the subarray is empty. Therefore, it is “trivially” sorted and contains the smallest elements of the entire array.

-

(Maintenance) Assume that at the start of the iteration, the sub-array is sorted and contains the smallest elements of the entire array .

Then at the iteration, let the smallest element of be called . Now, by our assumption:

(1) Since has the smallest elements of the array. We can say that . So if we put in the position, is sorted.

(2) Furthermore, since is the smallest element of , if we put in the position, now contains the smallest elements in the array.

-

(Termination) The loop terminates when . Thus, by our invariant, is sorted.